Introduction

Automatic Chord Recognition (ACR) is the task of transcribing chords from music that uses the twelve-tone equal temperament system. The building block of all music is the note, a discrete musical sound. As with all sounds, a note is a vibration in the air that our ears pick up, and it can be measured empirically as a frequency. The interval between two notes where one has double the frequency of the other is defined as an octave. The twelve-tone equal temperament system splits each octave into twelve discrete notes, with the frequency of each note a constant ratio (the twelfth root of two) above that of the note below it, so that the notes are evenly spaced on a logarithmic frequency scale. Virtually all modern music, as well as European music of the past, has been written in the twelve-tone equal temperament system. A chord is simply a collection of musical notes played simultaneously.

Recognizing abstract musical features such as chords in a song, where other musical elements such as percussion and melody are also present, has been a central research area in the field of music information retrieval. One of the limiting factors for ACR is the limited availability of datasets. Each song in a dataset requires a group of professional musicians to manually transcribe the chords and their corresponding time segments. The most comprehensive dataset contains around 1000 songs, and the raw audio files are not easily accessible due to copyright issues [7].
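The twelve-tone equal temperament system described above can be made concrete with a short sketch. It assumes the common convention that the note A4 is tuned to 440 Hz and numbered 69 in MIDI; neither the reference pitch nor the MIDI numbering is stated in this paper, and the function name is hypothetical.

```python
# Minimal sketch of twelve-tone equal temperament.
# Assumption (not from the paper): A4 = 440 Hz, MIDI note number 69.
A4_MIDI = 69
A4_HZ = 440.0


def note_frequency(midi_note: int) -> float:
    """Frequency in Hz of a note that lies (midi_note - 69) semitones from A4."""
    # Each semitone multiplies the frequency by 2 ** (1 / 12),
    # so twelve semitones (one octave) double it.
    return A4_HZ * 2.0 ** ((midi_note - A4_MIDI) / 12)


if __name__ == "__main__":
    for name, midi in [("A4", 69), ("A#4", 70), ("A5", 81)]:
        print(f"{name}: {note_frequency(midi):.2f} Hz")
```

Moving up twelve semitones from A4 to A5 doubles the frequency, which matches the definition of an octave given above.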

Motivation

This problem of transcribing chords is of great importance to musicians. Having a list of chords for a song allows musicians to play and share music effectively, either alone or with fellow musicians. This can be observed in the sheer popularity of lead sheets, a musical notation that contains the chords and the melody of a song, such as the Real Book, which is a staple in the jazz community. However, transcribing music is no trivial task. It requires professional musicians with years of training, and a typical song takes around 8 to 18 minutes to transcribe, with complicated songs taking up to an hour. Furthermore, musicians have noted that it is infeasible to transcribe more than a dozen songs in a single day because of the sheer amount of concentration needed [7]. An automated process is therefore appealing to musicians of all levels. Even without perfect accuracy, an automated transcription is beneficial because musicians can correct it instead of starting from scratch.

Related Work

ACR systems in the past have found success by limiting the size of their vocabulary, i.e. the number of chord types a system can detect. The size of a system’s vocabulary has been an important benchmark of state-of-the-art systems. The current state-of-the-art system [2] applies the Constant-Q transform to the digital music input. The Constant-Q transform is similar to a Short-Time Fourier transform but uses logarithmically spaced frequency bins. Because the frequencies of notes in the twelve-tone equal temperament system are also logarithmically spaced, this gives a higher resolution of musical frequencies. The result can be visualized as a spectrogram. This distribution of frequencies is used as input to a Convolutional Recurrent Neural Network, which contains 9 convolutional layers and a bidirectional Long Short-Term Memory layer. The latter layer allows temporal context to be taken into account and prevents the model from switching chords too frequently. Finally, a linear output layer outputs the predicted notes in a song, and a dictionary is used to determine the final chord prediction.
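The final step above, mapping predicted notes to a chord label through a dictionary, can be illustrated with a toy lookup. This is only a sketch under assumptions: the template table, the `lookup_chord` name, and the label format are hypothetical and are not taken from Jiang et al. [2], whose system targets a much larger vocabulary.

```python
# Toy dictionary lookup from a set of pitch classes to a chord label.
# Hypothetical illustration only; not the decoding used in [2].
NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]

# Intervals (in semitones above the root) mapped to a chord quality.
CHORD_TEMPLATES = {
    (0, 4, 7): "maj",
    (0, 3, 7): "min",
    (0, 3, 6): "dim",
    (0, 4, 8): "aug",
    (0, 4, 7, 10): "7",
    (0, 4, 7, 11): "maj7",
    (0, 3, 7, 10): "min7",
}


def lookup_chord(pitch_classes):
    """Return a label such as 'A:min', or 'N' if no template matches."""
    pcs = sorted({pc % 12 for pc in pitch_classes})
    # Try every sounding pitch class as a candidate root.
    for root in pcs:
        intervals = tuple(sorted((pc - root) % 12 for pc in pcs))
        quality = CHORD_TEMPLATES.get(intervals)
        if quality is not None:
            return f"{NOTE_NAMES[root]}:{quality}"
    return "N"


if __name__ == "__main__":
    print(lookup_chord([0, 4, 7]))      # C, E, G
    print(lookup_chord([7, 11, 2, 5]))  # G, B, D, F
```

A small table like this also shows why vocabulary size matters: any set of notes outside the table falls back to "N", so a richer vocabulary needs more templates and a model that can tell them apart.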

Proposed Method

Music Source Separation

Music source separation is another research area in music information retrieval, in which an audio file of a song is split into its constituent components. For example, a song file can be split into two files, one containing only the vocals and the other containing only the instruments. In early 2020, a music source separation model called Demucs was open sourced by Facebook Research [3]. This model was the state of the art in 2019 and performs remarkably well.

Novel Input Data

The input data for ACR systems is typically the Constant-Q transform of an audio file. However, there has been little effort to process the audio files before computing their Constant-Q transform. Applying a music source separation model to extract only the components of a song that contribute to its chords is a novel way of preparing input data.

Purpose

The purpose of this project is to empirically determine how much non-chord elements, such as percussion and melody, impact current state-of-the-art ACR systems. I hypothesize that ACR systems trained on songs with these non-chord elements removed will perform better than systems trained with the traditional method. Removing non-chord elements seems intuitively analogous to how computer vision systems preprocess their data to gain better performance through methods such as image segmentation and noise reduction.

Experiments

Data and Preprocessing

The dataset consisted of 1217 unique songs from the Humphrey and Bello dataset [1]. The Demucs model produces four separate tracks from a single audio track: Bass, Drums, Other, and Vocals. The Other track contains most of the instruments associated with playing the main chords of the song, such as the guitar and the piano. The Other and Bass tracks were combined into a single audio track using the amix filter in ffmpeg. The Constant-Q Transform of each song was computed using the librosa [5] package with a sample rate of 22050 Hz and a hop length of 512 samples.
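The mixing and transform steps above could look roughly like the following sketch. The file paths, function names, and the `duration=longest` option are assumptions made for illustration; the paper does not state the exact ffmpeg options or any Constant-Q parameters beyond the sample rate and hop length, so everything else is left at librosa's defaults.

```python
# Sketch of the preprocessing described above; not the author's actual script.
SAMPLE_RATE = 22050  # Hz, as stated in the text
HOP_LENGTH = 512     # samples, as stated in the text


def build_amix_command(bass_path, other_path, out_path):
    """Build an ffmpeg command that mixes two stems with the amix filter."""
    return [
        "ffmpeg", "-y",
        "-i", str(bass_path),
        "-i", str(other_path),
        # duration=longest is an assumed option, not taken from the paper.
        "-filter_complex", "amix=inputs=2:duration=longest",
        str(out_path),
    ]


def compute_cqt(audio_path):
    """Return the magnitude Constant-Q transform of an audio file."""
    # Imported lazily so the command builder works without librosa installed.
    import librosa
    import numpy as np

    y, sr = librosa.load(str(audio_path), sr=SAMPLE_RATE)
    cqt = librosa.cqt(y, sr=sr, hop_length=HOP_LENGTH)
    return np.abs(cqt)


if __name__ == "__main__":
    # Hypothetical file names for the Bass and Other stems of one song.
    print(" ".join(build_amix_command("bass.wav", "other.wav", "mix.wav")))
```

The command list can be passed to `subprocess.run(cmd, check=True)` to perform the mix, after which `compute_cqt("mix.wav")` would produce the model input. At 22050 Hz with a hop of 512 samples, this gives roughly 43 frames per second of audio.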

Models

Two models were trained: one on the Constant-Q Transform computed from the songs processed with Demucs, and one on the Constant-Q Transform computed from the original dataset. 60% of the dataset was randomly selected for training, and 20% each was randomly selected for validation and testing. The model architecture used is that of Jiang et al. [2].
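A random 60/20/20 split like the one described above can be sketched as follows. The function name, the seed, and the rounding rule are assumptions. The paper does not say how the split was drawn or whether both models shared the same split.

```python
import random


def split_dataset(song_ids, train_frac=0.6, val_frac=0.2, seed=0):
    """Shuffle song IDs and split them into train, validation, and test lists."""
    ids = list(song_ids)
    # A fixed seed (an assumption here) makes the split reproducible.
    random.Random(seed).shuffle(ids)
    n_train = int(train_frac * len(ids))
    n_val = int(val_frac * len(ids))
    train = ids[:n_train]
    val = ids[n_train:n_train + n_val]
    test = ids[n_train + n_val:]  # the remainder goes to the test set
    return train, val, test


if __name__ == "__main__":
    train, val, test = split_dataset(range(1217))
    print(len(train), len(val), len(test))
```

Reusing one seed for both the Demucs-processed and the original data would give the two models identical song partitions, which removes the split as a source of difference between them.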

Evaluation Metric

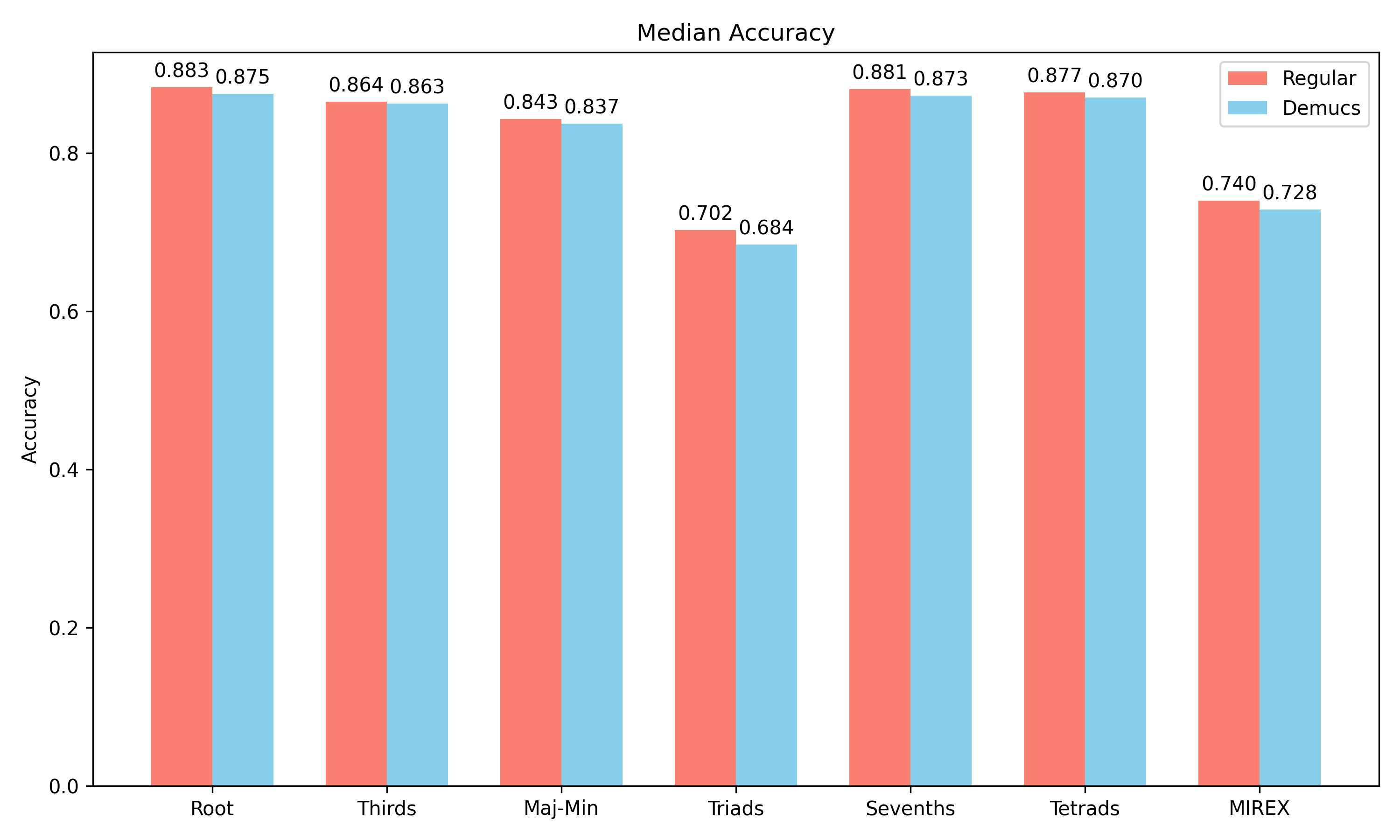

The following metrics were used to evaluate our models using the mir_eval [6] package: Root, Thirds, Maj-Min, Triads, Sevenths, Tetrads, and MIREX.
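To give a feel for what these metrics measure, the sketch below computes a simplified, duration-weighted version of the Root metric. It is not the mir_eval implementation: it assumes the reference and estimated annotations have already been aligned to common segments, uses hypothetical function names, and treats only "N" as a no-chord label.

```python
# Simplified illustration of a duration-weighted root accuracy.
# Assumes labels look like "C:maj", "Db:min7/b3", or "N" (no chord).
NATURAL_PITCH_CLASS = {"C": 0, "D": 2, "E": 4, "F": 5, "G": 7, "A": 9, "B": 11}


def root_pitch_class(label):
    """Return the root pitch class (0-11) of a chord label, or None for 'N'."""
    root = label.split(":")[0].split("/")[0]
    if root == "N":
        return None
    pc = NATURAL_PITCH_CLASS[root[0]]
    # Sharps raise and flats lower the natural note by one semitone each.
    pc += root.count("#") - root[1:].count("b")
    return pc % 12


def weighted_root_accuracy(segments):
    """segments: iterable of (start, end, reference_label, estimated_label)."""
    total = 0.0
    correct = 0.0
    for start, end, ref_label, est_label in segments:
        duration = end - start
        total += duration
        if root_pitch_class(ref_label) == root_pitch_class(est_label):
            correct += duration
    return correct / total if total > 0 else 0.0


if __name__ == "__main__":
    example = [
        (0.0, 2.0, "C:maj", "C:min7"),    # same root, different quality
        (2.0, 3.0, "A:min", "F:maj"),     # different root
        (3.0, 4.0, "Db:maj", "C#:maj/3"), # enharmonically equal roots
    ]
    print(weighted_root_accuracy(example))
```

The other metrics follow the same duration-weighted idea but compare more of the chord (thirds, triads, sevenths, and so on) instead of only the root.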

Results

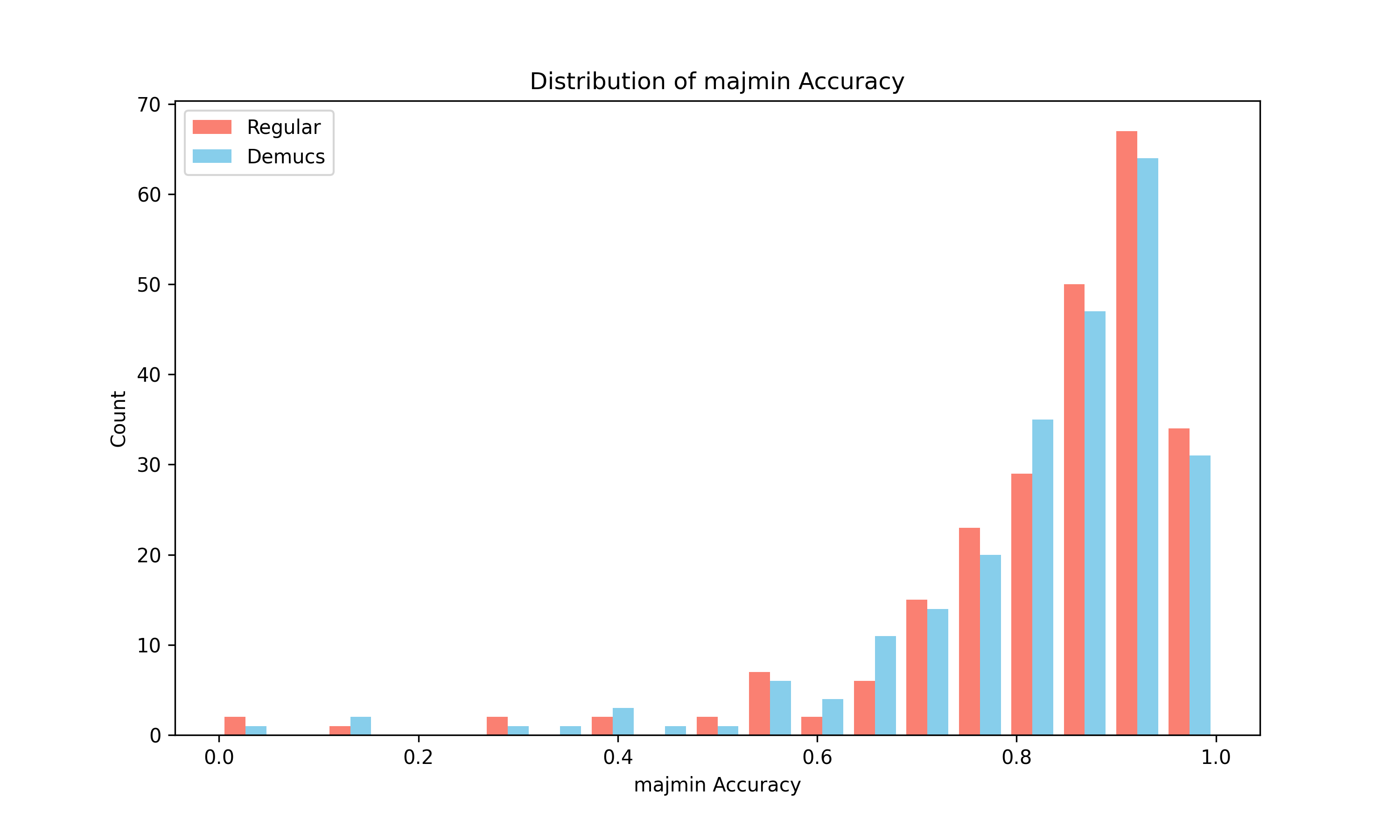

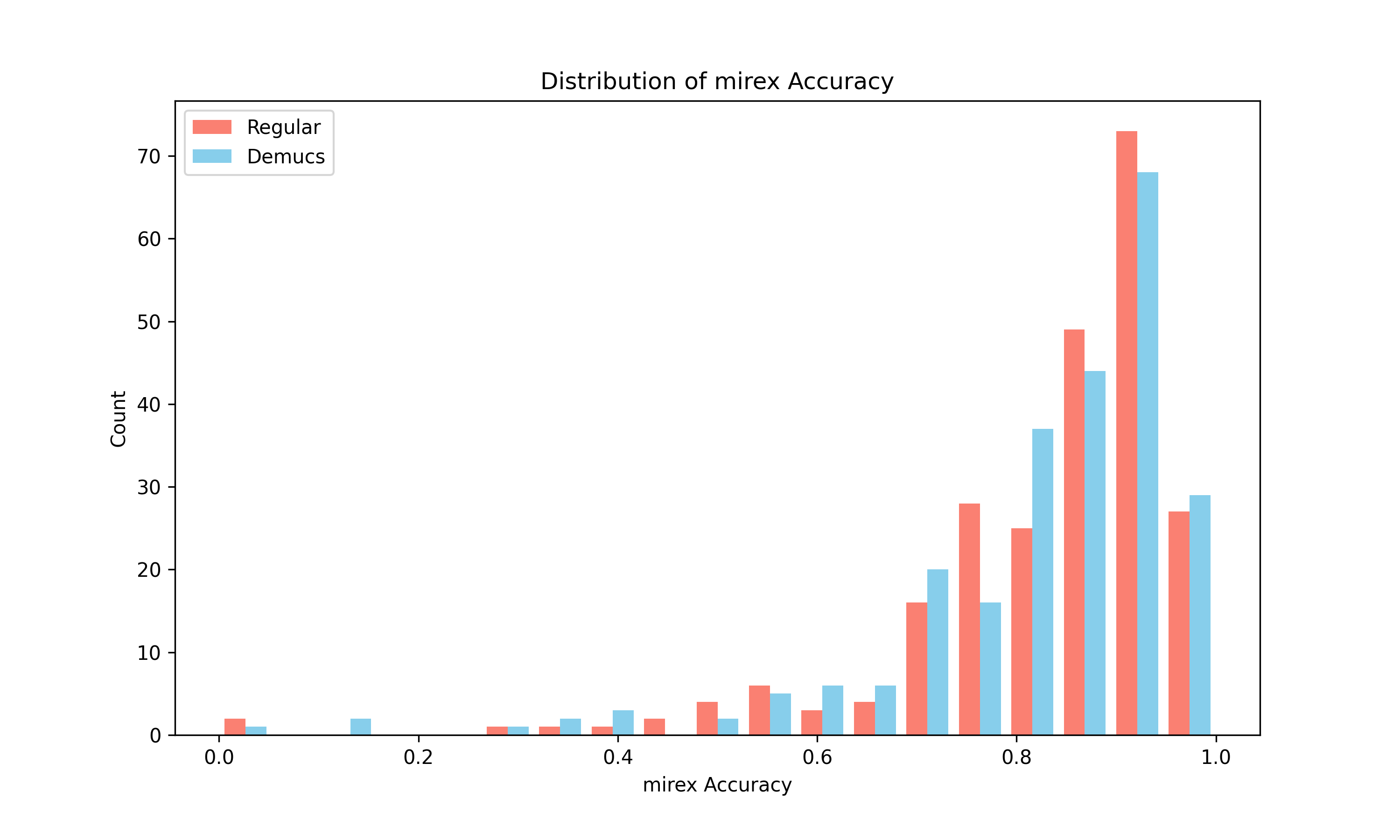

On average across all metrics, the Demucs model performed worse than the model trained on the original dataset. For both models, the distribution of accuracy resembled a right-skewed normal distribution across all metrics.

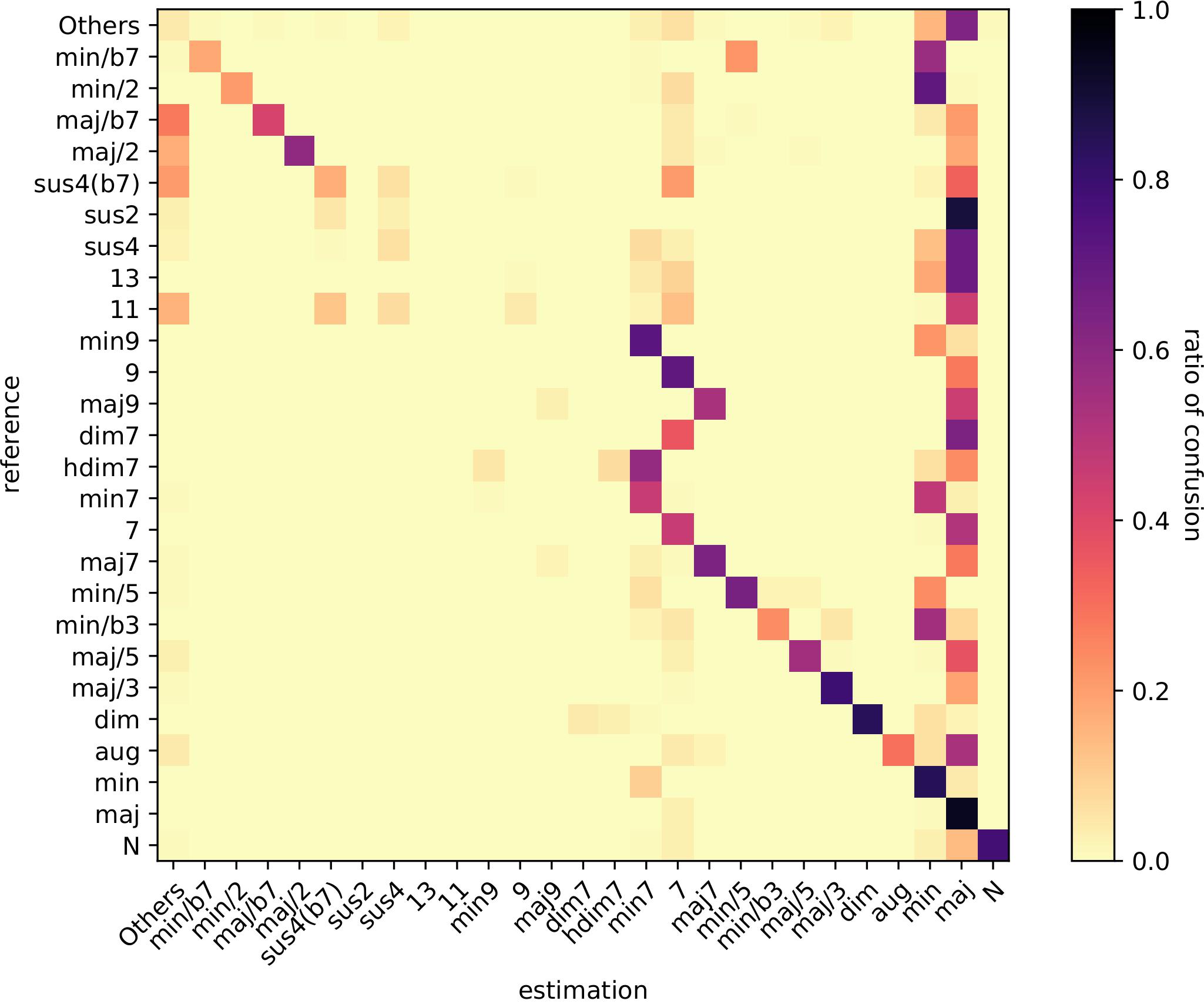

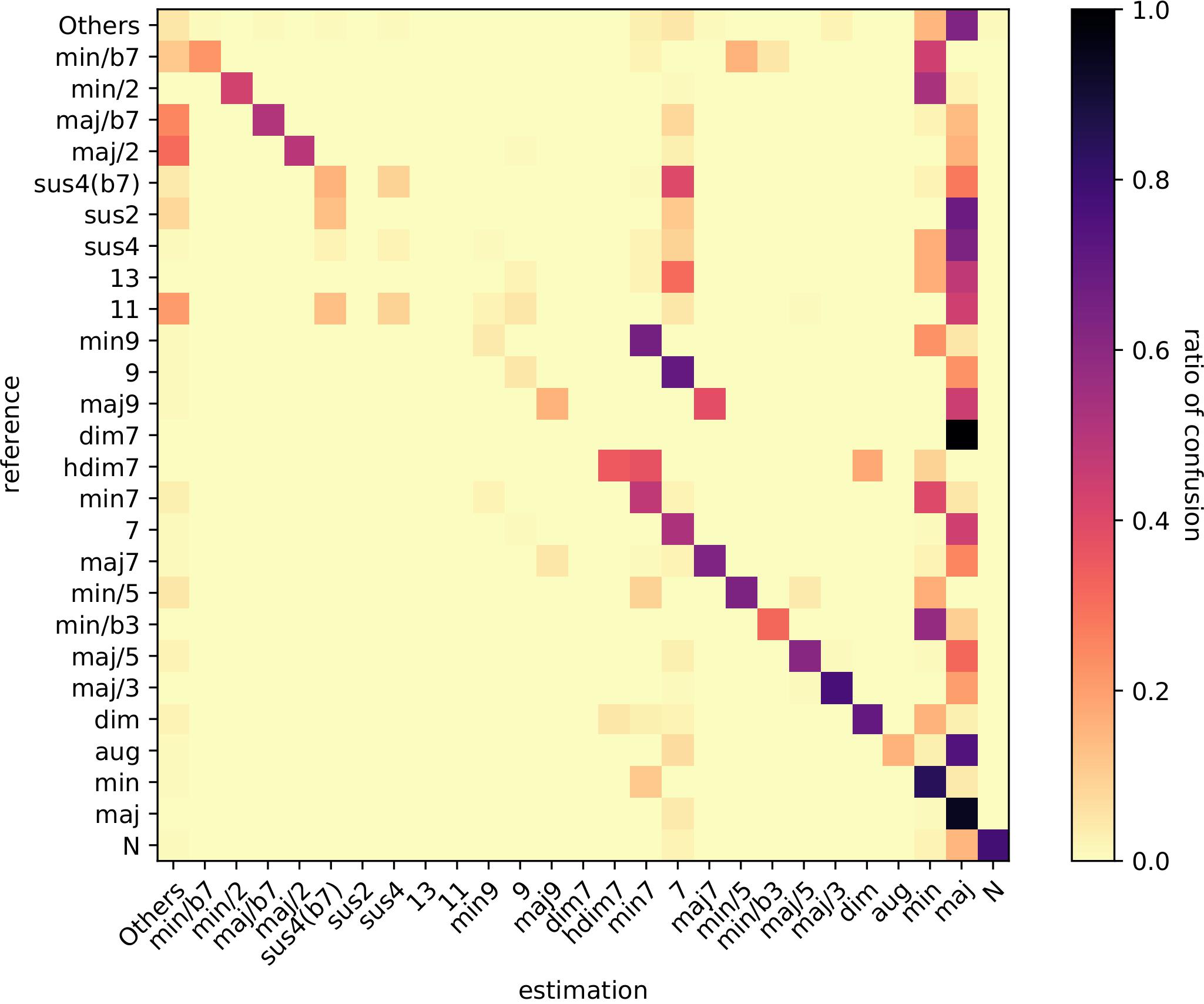

Both models predicted major and minor chords exceptionally well and predicted 7th chords and chords of similar complexity reasonably well. However, more complicated chords were confused with their corresponding base triads.

Figures: Median Accuracy; Distribution of Root Accuracy; Distribution of majmin Accuracy; Distribution of mirex Accuracy; Distribution of Sevenths Accuracy; Confusion Matrix of Demucs Model with Demucs Dataset; Confusion Matrix of Model Trained with Original Dataset.

Discussion of Challenges

Problems with the original dataset

I originally planned to use the McGill Billboard dataset [7], but I quickly found that this would be too difficult. The dataset provides precomputed chroma vectors, but these are not compatible with Demucs, the music source separation model used in this project, which operates on raw audio. I tried to manually download the audio files from the provided metadata using youtube-dl, but a large number of different versions of each song exist (cover songs, the same song on different albums, etc.), and there is no reliable way to find the correct one. Since the dataset contains over 1000 songs, finding the exact audio file used in the dataset is impractical to do manually or with a simple automation script.

New Dataset

I chose to use the Humphrey and Bello dataset [1], the same dataset used by Jiang et al. [2]. This dataset is slightly larger than the McGill Billboard dataset and contains parts of it. I reached out to NYU’s Music and Audio Research Laboratory (MARL), and they were kind enough to provide me with a copy of the raw audio files and their corresponding chord labels.

Computational Problems with Music Source Separation model

Another challenge was running Demucs on the dataset. Demucs supports GPU acceleration, but it requires 8 GB of GPU memory, and my personal GPU does not meet this requirement. Demucs can also run on the CPU alone, but this is extremely slow: it takes on average 2 minutes to process a single song, so a dataset of over 1000 songs would take around 33 hours to process. Tweaking any Demucs settings would require another 33 hours of compute time. To overcome this computational barrier, I reached out to the Center for High Throughput Computing and was able to get access to their GPUs.
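The estimate above is simple arithmetic, sketched below with a hypothetical helper. The 2 minutes per song is the average quoted in the text; the song counts are the round figure of 1000 used above and the 1217 songs reported in the Data and Preprocessing section.

```python
def cpu_hours(num_songs, minutes_per_song=2.0):
    """Estimated CPU-only processing time in hours for a full pass."""
    return num_songs * minutes_per_song / 60.0


if __name__ == "__main__":
    for n in (1000, 1217):
        print(f"{n} songs: about {cpu_hours(n):.1f} hours")
```

For 1000 songs this gives roughly 33 hours, and for the full 1217 songs roughly 41 hours, for each pass over the dataset.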

Conclusion

In this paper, we proposed a novel way of training ACR systems using music source separation. Our experiments showed that the model trained on songs processed with music source separation performed worse on average than a model trained on the original dataset.

The poor performance of the music source separation model may be due to artifacts in the separated audio tracks. Each song had a varying degree of artifacts, ranging from unnoticeable to barely listenable. To empirically show the effect of source separation on ACR systems without these artifacts, the original audio stems could be used to train a model. However, there is currently no dataset with original audio stems and their corresponding chord labels.

It is not clear whether music source separation fails to help only the particular model used in this paper or ACR systems in general. It is possible that the model used in this paper is sufficiently deep that music source separation offers no benefit. Shallower ACR models may be able to achieve higher performance while reducing training time by using music source separation. Further study across a wide range of ACR systems is required to understand their relationship with music source separation.

Given more time and compute resources, this experiment should be run again using k-fold cross validation. This paper only trained the two models once. It is possible that the random selection of songs caused the difference in performance between the two models. K-fold cross validation would allow for a fairer comparison between the two models and allow us to determine whether the results obtained were caused by random chance.
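As a sketch of how the proposed k-fold comparison could partition the songs, the generator below yields train and test lists for each fold. The choice of k = 5, the seed, and the function name are assumptions. A validation subset would still need to be carved out of each training list to mirror the setup used in this paper.

```python
import random


def k_fold_splits(song_ids, k=5, seed=0):
    """Yield (train_ids, test_ids) pairs, one per fold."""
    ids = list(song_ids)
    random.Random(seed).shuffle(ids)
    # Deal the shuffled IDs into k folds of nearly equal size.
    folds = [ids[i::k] for i in range(k)]
    for i in range(k):
        test = folds[i]
        train = [s for j, fold in enumerate(folds) if j != i for s in fold]
        yield train, test


if __name__ == "__main__":
    for fold, (train, test) in enumerate(k_fold_splits(range(1217))):
        print(f"fold {fold}: {len(train)} train, {len(test)} test")
```

Training both models on the same folds and comparing their scores fold by fold would show whether the gap between them persists across different song selections.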

Acknowledgments

This research was performed using the compute resources and assistance of the UW-Madison Center For High Throughput Computing (CHTC) in the Department of Computer Sciences. The CHTC is supported by UW-Madison, the Advanced Computing Initiative, the Wisconsin Alumni Research Foundation, the Wisconsin Institutes for Discovery, and the National Science Foundation, and is an active member of the Open Science Grid, which is supported by the National Science Foundation and the U.S. Department of Energy’s Office of Science.

The author would like to thank Junyan Jiang for providing a reference implementation for his ACR model and NYU’s Music and Audio Research Laboratory for providing the dataset needed to perform this research.

References

[1] Eric J. Humphrey, Juan P. Bello. “Four Timely Insights on Automatic Chord Estimation”, 16th International Society for Music Information Retrieval Conference, 2015

[2] Junyan Jiang, et al. “Large-Vocabulary Chord Transcription via Chord Structure Decomposition”, 20th International Society for Music Information Retrieval Conference, Delft, The Netherlands, 2019.

[3] Alexandre Défossez, Nicolas Usunier, Léon Bottou, Francis Bach, “Music Source Separation in the Waveform Domain”, hal-02379796, 2019

[4] J. Pauwels and G. Peeters, “Evaluating automatically estimated chord sequences”, 2013 IEEE International Conference on Acoustics, Speech and Signal Processing, 2013

[5] McFee, Brian, Colin Raffel, Dawen Liang, Daniel PW Ellis, Matt McVicar, Eric Battenberg, and Oriol Nieto. “librosa: Audio and music signal analysis in python.” In Proceedings of the 14th python in science conference, pp. 18-25. 2015.

[6] Colin Raffel, Brian McFee, Eric J. Humphrey, Justin Salamon, Oriol Nieto, Dawen Liang, and Daniel P. W. Ellis, “mir_eval: A Transparent Implementation of Common MIR Metrics”, Proceedings of the 15th International Conference on Music Information Retrieval, 2014.

[7] John Ashley Burgoyne, Jonathan Wild, and Ichiro Fujinaga, “An Expert Ground Truth Set for Audio Chord Recognition and Music Analysis”, in Proceedings of the 12th International Society for Music Information Retrieval Conference, ed. Anssi Klapuri and Colby Leider (Miami, FL, 2011), pp. 633–38